Posted: July 30, 2014, 11:12 a.m. by Rachel Stone

The Making of Charlemagne’s Europe team gave several presentations at the International Medieval Congress 2014 at Leeds. One of the comments raised in discussions afterwards was about the interconnectivity of the Charlemagne database with other databases; one of the people who raised this was Florence Codine-Trécourt from the Bibliothèque nationale de France, Department of Coins, Medals and Antiques. She was talking about current efforts to ensure the interconnectivity of coin databases, but she’s also working within the context of the humanities institutions who have always taken interconnectivity the most seriously: libraries. One of the most useful databases to which I have access is one of the great triumphs of interconnectivity: COPAC, which connects together the library catalogues of more than 80 research libraries in the UK and Ireland.

As an ex-librarian myself (and someone who’s also worked as a librarian in the coin department of a museum), I’m a fan of interconnectivity generally. And the possibilities of interconnectivity for some forms of historical work are now dizzying. But looking at the specific data we’re working with for this project, I’m not convinced that interconnectivity is going to get us an awfully long way. In this post I’m therefore approaching interconnectivity from the bottom up, thinking about various kinds of data we might be interested in trying to connect together and the chances of being able to do so in practice.

Finding items that are the same

One of the most basic aspects of interconnectivity for coin and bibliographical databases is being able to connect together examples of the "same thing" that appear in different collections. For books and other media collected in libraries, the "same thing" is conceptually represented by the FRBR standard, which distinguishes between a work (as an intellectual creation), an expression (specific form), a manifestation (physical embodiment) and an item (single exemplar). This means that you can start as a user from wanting Charles Darwin’s On the Origin of Species and end up holding in your hand one particular copy of a 1950 edition held by King’s College London.
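To make the FRBR levels a little more concrete, here is a minimal Python sketch of the work, expression, manifestation and item chain, using the Darwin example. The class and field names are my own illustrative assumptions, not part of the FRBR specification or of COPAC’s data model.

```python
from dataclasses import dataclass, field

# Illustrative only: a simplified FRBR Group 1 hierarchy.
# Field names are assumptions, not taken from any formal FRBR schema.

@dataclass
class Item:
    holding_library: str  # the institution holding this single physical copy

@dataclass
class Manifestation:
    edition: str
    year: int
    items: list[Item] = field(default_factory=list)

@dataclass
class Expression:
    form: str  # e.g. a particular text or translation
    manifestations: list[Manifestation] = field(default_factory=list)

@dataclass
class Work:
    title: str
    creator: str
    expressions: list[Expression] = field(default_factory=list)

def find_items(work: Work, year: int) -> list[tuple[Manifestation, Item]]:
    """Walk down from the abstract work to every physical copy of a given year's edition."""
    return [
        (m, i)
        for e in work.expressions
        for m in e.manifestations
        if m.year == year
        for i in m.items
    ]

origin = Work("On the Origin of Species", "Charles Darwin", [
    Expression("English text", [
        Manifestation("1950 edition", 1950, [Item("King's College London")]),
    ]),
])
print([i.holding_library for _, i in find_items(origin, 1950)])
# → ["King's College London"]
```

The user’s starting point is the `Work`; the copy they end up holding is an `Item` several levels down.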

I’m not as well up on museum conceptual standards such as CIDOC CRM, but they do exist, and it’s intuitively easy to see that with coins you’ve got the same kind of move from abstract categories (Charlemagne’s denier) to a number of single exemplars (a particular coin in a collection). In other words, coins, like printed books, have always been mass-produced items, and it’s therefore useful to be able to find (or choose from) all the ones that are almost exactly the same.
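The same abstract-to-exemplar move for coins can be sketched in a few lines of Python. This assumes, hypothetically, that every collection tags its coins with a shared type identifier; the identifiers, collection names and accession numbers below are invented. In practice, agreeing on shared identifiers like these is what coin interconnectivity efforts have to achieve first.

```python
from collections import defaultdict

# Invented records: each physical coin (an exemplar) carries the identifier
# of the abstract type it belongs to. The type IDs are hypothetical.
coins = [
    {"collection": "Collection A", "accession": "A-17", "type_id": "type:denier-1"},
    {"collection": "Collection B", "accession": "B-203", "type_id": "type:denier-1"},
    {"collection": "Collection B", "accession": "B-204", "type_id": "type:denier-2"},
]

def group_by_type(records):
    """Gather exemplars from any number of collections under their shared type."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec["type_id"]].append((rec["collection"], rec["accession"]))
    return dict(groups)

by_type = group_by_type(coins)
print(by_type["type:denier-1"])
# → [('Collection A', 'A-17'), ('Collection B', 'B-203')]
```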

With charters, however, when you get down to individual items, you start off with only one or two physical items (depending on whether both sides of a transaction get a copy). You might get a few additional copies in cartularies and you might get several different editions of the same charter text, but you’re never looking at large numbers of records you need to connect together. Charter databases therefore don’t normally include complex levels of abstraction of the FRBR kind, because they just aren’t required for linkage.

Subject access

If you look at the COPAC search screens, what you will see is that most of the search options concern finding specific books or authors. There’s one option for subject search and one for keyword (finding any word anywhere), but they’re subordinated. There’s a similar pattern if you look at the fields used by MESA (Medieval Electronic Scholarly Alliance), which carries out federated searches of medieval digital objects (manuscripts etc).

This is because subject searching is not reliable. There have been numerous studies of indexing over the years (see e.g. the references in Concha Soler Monreal and Isidoro Gil-Leiva, "Evaluation of controlled vocabularies by inter-indexer consistency", Information Research 16 (4), December 2011), and they all show similar results: in general, you’re doing well if you get 50% consistency in assigning index terms with expert indexers and a shared thesaurus/controlled vocabulary.
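Inter-indexer consistency is usually expressed as a simple ratio. The sketch below implements two measures commonly used in the indexing literature: Hooper’s, C / (A + B − C), and Rolling’s, 2C / (A + B), where A and B are the numbers of terms each indexer assigned and C the number they share. I’m not claiming these are the exact measures used in the study cited above, and the two indexers’ term sets are invented.

```python
def hooper(a: set[str], b: set[str]) -> float:
    """Hooper's measure: C / (A + B - C), i.e. shared terms over all distinct terms."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0  # two empty sets count as agreement

def rolling(a: set[str], b: set[str]) -> float:
    """Rolling's measure: 2C / (A + B)."""
    total = len(a) + len(b)
    return 2 * len(a & b) / total if total else 1.0

# Invented index terms assigned to the same document by two indexers.
indexer_1 = {"donations", "monasteries", "land tenure", "witnesses"}
indexer_2 = {"donations", "monasteries", "leases", "boundaries"}

print(f"Hooper: {hooper(indexer_1, indexer_2):.2f}")    # → Hooper: 0.33
print(f"Rolling: {rolling(indexer_1, indexer_2):.2f}")  # → Rolling: 0.50
```

The same pair of indexers scores 50% on one measure and 33% on the other, so published consistency figures are only comparable when the measure is stated.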

You might be able to do slightly better on consistency if you have a very small group of indexers working in a narrow subject field (although intra-indexer consistency is also a known problem), but subject searching across humanities databases produced by different organisations with slightly different users in mind is more akin to typing terms into Google than anything systematic. You may well find something of interest, but there’s an awful lot you’ll miss.

Diplomatic

There are a number of other charter database projects being developed at the moment (see our list of links for some examples). To what extent would it be possible to allow interconnection with them?

One important issue is that the Charlemagne project, unlike some other projects, is not much concerned with diplomatic (the study of the transmission and form of charters). Our focus is primarily on the content. A quick look at the Charters Encoding Initiative and their copy of the Vocabulaire international de la diplomatique shows just how much standardisation work would be required to generate authority lists that would adequately reflect our interest in the types of transaction recorded in charters and the roles played in carrying them out as well as the traditional diplomatic emphasis on types of document and the roles played in generating them. For example, the VID doesn’t have an entry for manumissions, and while it includes various forms of donation as entries, it doesn’t have an entry for "donor".

It might be possible to create an agreed standard for such matters, but it wouldn’t be an easy process. And even then, applying such standards consistently would be very difficult. Our team recently had a long argument about whether one particular document (Farfa 2:163) was a precarial grant or not, since it included the term "precaria", but involved Farfa granting out in lease to one person property previously given to it by another. Similarly, there is no clear dividing line between sales and donations with counter-gifts. I’m not yet convinced that the effort involved in standardisation would allow sufficient precision and recall to make cross-searching databases reliable.

People and organisations

Interconnectivity of information about people (and organisations) is one of the most obvious possibilities for almost any database. Authority lists for personal names such as those created by the Library of Congress have existed for a long time, and there are also new initiatives such as SNAP:DRGN, which aims to connect together existing classical prosopographies.

We have made provision for interconnectivity for this aspect of the Charlemagne database. Records for individuals include, where possible, the reference for the same person from the Nomen et gens database. Our choice of this specific database and our lack of engagement with others reflects both the nature of prosopographical data generally and the particular characteristics of our dataset.

One of the most basic facts about people is that they die: man is a mortal animal. Therefore, people are only going to reappear in sources within a relatively short timespan. I can almost guarantee that the datasets concerning the classical world potentially connected by SNAP:DRGN will contain no references to people who appear in our charters. Nomen et gens is only of use to us because it is primarily an onomastics database and therefore collects name forms across a wide range of centuries, including the eighth and ninth centuries, on which we focus.

Would interconnectivity with other (hypothetical) early medieval databases be of more use to us? The problem here is that as compared to databases which focus on authors, officials or coins, our data on people is inevitably going to have a distribution with an unusually long tail.

Many authors (or creators of creative works more generally) produce more than one book/film/album etc. Most officials (even relatively humble ones like priests) will have a sequence of offices held. Most issuers of coins will issue more than one type and design of coin. In contrast, the vast majority of people mentioned in our database appear in very few charters. So far, with around 7000 agents (people and organisations) entered into the system, around 97% of them appear in five charters or fewer. I suspect that if we broke that figure down further, we’d see an even starker contrast: predominantly, Carolingian people are connected with only one charter.
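Figures like the 97% above come from a simple count of how many charters each agent is linked to. Here is a minimal sketch, assuming agent-charter links can be exported as (agent, charter) pairs; the identifiers and toy data are invented and are not drawn from the Charlemagne database.

```python
from collections import Counter

def charter_counts(links):
    """Count distinct charters per agent from (agent_id, charter_id) pairs."""
    return Counter(agent for agent, _ in set(links))  # set() drops duplicate links

def share_at_most(counts, k):
    """Fraction of agents linked to k or fewer charters."""
    if not counts:
        return 0.0
    return sum(1 for n in counts.values() if n <= k) / len(counts)

# Toy data: one well-attested agent and three who barely appear.
links = [("a1", f"c{i}") for i in range(1, 8)] + [
    ("a2", "c1"), ("a3", "c2"), ("a4", "c3"), ("a4", "c4"),
]
counts = charter_counts(links)
print(share_at_most(counts, 5))  # → 0.75
print(share_at_most(counts, 1))  # → 0.5 (agents in exactly one charter)
```

Breaking the figure down further, as suggested above, just means calling `share_at_most` with smaller values of `k`.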

As this indicates, the people we’re dealing with are therefore mostly very minor actors as far as the surviving documentary record goes. This is a function of this particular period: once you get (surviving) censuses, as in Victorian England, you’re likely to have at least one source available for all but the most marginal figures, which you can cross-check with other sources. Even if you just have substantially preserved sets of tax records, you’re likely to be able to pick up the majority of property-holders. But for Carolingianists, the charters are the most granular source we have: they give us far more details about lower levels of society than narrative sources or even confraternity books.

The local figures who make up the vast majority of our people count will show up in the charters for their region or not at all. We can expend effort on building crosswalks between databases for personal data, but there is going to be very little data actually there to carry across. It’s for that reason that work on interconnection of people doesn’t seem a useful priority for our project.

Places

People die; the land endures. Or to put it more prosaically, there’s a lot more continuity of places than there is of people. Our data will often refer to locations that would have been familiar to the Romans; it may be searched by users who are sitting at their computer in roughly the same spot. The problem with places tends not to be persistent existence (although there are some abandoned or relocated settlements); the problem is persistent identification. How do we match up a place name in a particular medieval charter to a stable identifier? And what counts as a stable identifier for places, anyhow?

The traditional method for persistent identification of medieval places has been the use of modern place names, but that doesn’t really work in the longer term. I’m currently working with the standard edition of Charlemagne’s charters, which dates from 1906; this gives "modern" names in their German form and uses the administrative borders and units existing at the time, when Prussia was still a region of Germany and Alsace wasn’t yet part of France. I’m still not entirely sure how our database is going to cope with the reorganised Italian provinces from 2014 onwards. And individual place names themselves change: Châlons-sur-Marne, for example, became Châlons-en-Champagne in 1998.
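One way to cope with renamings like Châlons is to attach a dated name history to a stable identifier, rather than treating any single modern name as the identifier. This is a hypothetical sketch: the identifier `place:0001` is invented, the open start date just means the sketch doesn’t record when the older name came into use, and whole-year granularity is a simplification.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class NameSpan:
    name: str
    start: Optional[int]  # first year in use (None = not recorded in this sketch)
    until: Optional[int]  # year the name stopped being used, exclusive (None = current)

# Hypothetical stable identifier; the 1998 renaming is the one mentioned above.
HISTORY = {
    "place:0001": [
        NameSpan("Châlons-sur-Marne", None, 1998),
        NameSpan("Châlons-en-Champagne", 1998, None),
    ],
}

def name_in(place_id: str, year: int) -> Optional[str]:
    """Return the name a place bore in a given year, if the history covers it."""
    for span in HISTORY.get(place_id, []):
        if (span.start is None or span.start <= year) and (span.until is None or year < span.until):
            return span.name
    return None

def resolve(name: str) -> Optional[str]:
    """Map any historical or current name back to the stable identifier."""
    for place_id, spans in HISTORY.items():
        if any(span.name == name for span in spans):
            return place_id
    return None

print(name_in("place:0001", 1906))    # → Châlons-sur-Marne
print(resolve("Châlons-en-Champagne"))  # → place:0001
```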

Most projects creating stable identifiers for medieval places therefore now use geo-coordinates as well. The projects I’m most aware of are Pleiades and Regnum Francorum online, both of which are part of a wider network, Pelagios. While these all look very promising, I’m not yet sure that their medieval place to modern place linking procedures are sufficiently robust to make them suitable for direct use by the project.

To explain that, let’s look at the Pleiades model for linking places (found on their help pages). This takes the following form:

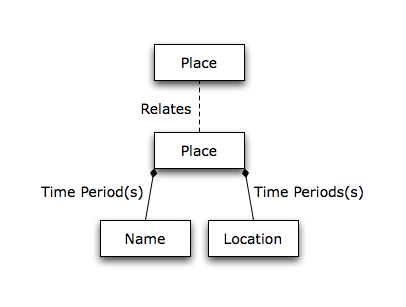

In contrast, here is our conceptual model:

The key difference is in how we know that a medieval place and a modern place are related. We are (implicitly and occasionally explicitly) citing either the editor of the charter in which the place is first found or other scholarship on its identification. Our identification processes aren’t as transparent as they should be, but other projects seem to make them effectively opaque. For example, the Regnum Francorum project has geolocated places from charter Wissembourg 19. Erbenuuilare is identified as Hermerswiller, an identification that the latest editor of the Wissembourg cartulary explicitly rejects. It’s not clear to me how the identification is made in RFO or in other community-sourced models.
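The point that an identification should carry its scholarly source with it can be sketched as a small data structure. This is a hypothetical illustration, not the Charlemagne database’s actual schema: each identification stores who asserted or rejected it, so a disagreement like the one over Erbenuuilare shows up as contested rather than silently resolved.

```python
from dataclasses import dataclass, field

@dataclass
class Assertion:
    source: str    # who made (or rejected) the identification
    accepts: bool  # True if the source supports it, False if it rejects it

@dataclass
class Identification:
    charter: str
    medieval_name: str
    modern_place: str
    assertions: list[Assertion] = field(default_factory=list)

    def status(self) -> str:
        """Summarise the scholarly verdicts attached to this identification."""
        if not self.assertions:
            return "unsourced"
        verdicts = {a.accepts for a in self.assertions}
        if verdicts == {True}:
            return "accepted"
        if verdicts == {False}:
            return "rejected"
        return "contested"

erbenuuilare = Identification(
    "Wissembourg 19", "Erbenuuilare", "Hermerswiller",
    [
        Assertion("Regnum Francorum online", True),
        Assertion("latest editor of the Wissembourg cartulary", False),
    ],
)
print(erbenuuilare.status())  # → contested
```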

While this is an argument against using such sources as standardised place-name references at the moment, there is already a possible way of allowing some interconnectivity for place-name searching. Any medieval place name that is geo-located in the Charlemagne database could potentially be searched by its geo-coordinates; so could any place that is geo-located in these other systems. A geo-coordinate search set up to allow a certain tolerance (e.g. anything within 1 km of a given point) could potentially be used across a number of different systems.
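A tolerance-based coordinate search is straightforward to sketch. The version below uses the haversine great-circle distance on a spherical Earth and the 1 km tolerance mentioned above. The system names, record IDs and coordinates are invented, and real systems would expose their own query interfaces, which I’m not modelling here.

```python
import math
from typing import Iterable, NamedTuple

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius; treats the Earth as a sphere

class Place(NamedTuple):
    system: str     # which database the record comes from (hypothetical)
    record_id: str
    lat: float
    lon: float

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))

def within(lat: float, lon: float, places: Iterable[Place], tolerance_km: float = 1.0):
    """Return (distance_km, place) for every record within the tolerance, nearest first."""
    hits = [(haversine_km(lat, lon, p.lat, p.lon), p) for p in places]
    return sorted((h for h in hits if h[0] <= tolerance_km), key=lambda h: h[0])

# Invented records from two hypothetical systems.
gazetteer = [
    Place("our_db", "p1", 48.005, 7.0),     # about 0.56 km north of the query point
    Place("other_db", "x9", 48.0, 7.01),    # about 0.74 km east
    Place("other_db", "x10", 48.02, 7.0),   # about 2.2 km north, outside tolerance
]
for dist, place in within(48.0, 7.0, gazetteer):
    print(f"{place.system}:{place.record_id} at {dist:.2f} km")
```

A linear scan like this is fine for a few thousand places; larger gazetteers would want a spatial index, but the matching logic stays the same.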

This obviously wouldn’t find medieval places that we haven’t been able to geolocate, but since the majority of these are unidentified places known only from one or two documents, the chances of our being able to match them to references in classical texts (the main source for Pleiades) are negligible anyhow. Cross-searching by geo-coordinates with fuzzy matching may well be the most effective method for interconnecting databases of ancient and medieval historical places; explicit referencing of place names may not be necessary.

Overall, then, interconnectivity isn’t a current priority of our project, because of the data that underlies it, but there are potential ways to take the data we already provide and interconnect it elsewhere.
